Can Apache Superset Generate Synthetic Data? An Introduction

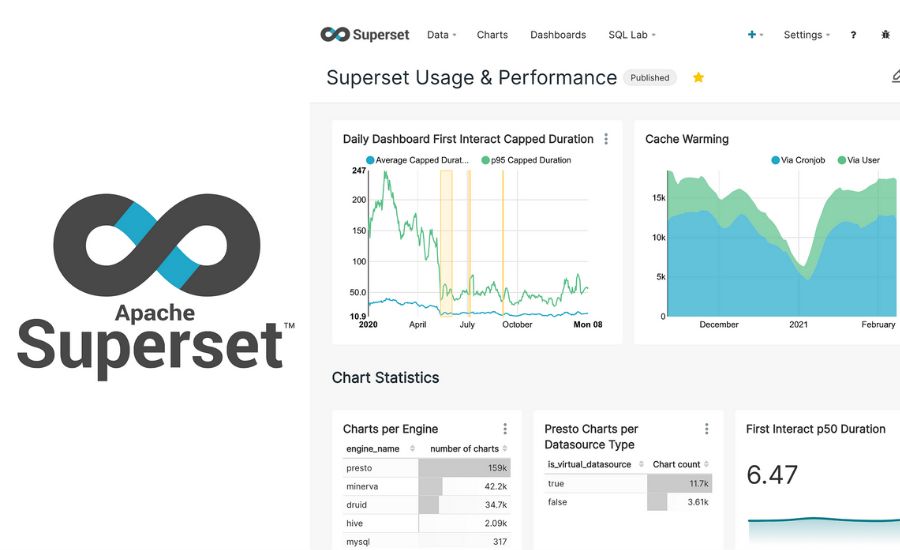

One of the most common frustrations users experience with Business Intelligence (BI) tools is slow dashboard performance. No matter how visually appealing or well organized a dashboard is, it quickly becomes ineffective if charts take too long to load. Speed is a vital factor in maintaining a smooth user experience, and delays of even a few seconds can significantly impact productivity.

Research dating back to the 1960s, conducted by IBM, suggests that users expect interactive systems to respond within approximately 3.14 seconds, a time frame humorously known as a “nanocentury.” If a BI dashboard takes longer than this to display results, users may abandon it in favor of alternative methods, rendering the tool ineffective.

The Challenge: Processing Massive Datasets Quickly

BI dashboards are often powered by complex queries running on large datasets, sometimes involving millions or even billions of rows. The sheer volume of data can create bottlenecks, leading to slow response times. However, modern optimization techniques can considerably improve dashboard performance, making it possible to achieve load times of under four seconds, even with large-scale data.

To understand how to achieve optimal performance, let’s first examine the lifecycle of a BI dashboard, starting from the initial SQL queries that generate the visualizations.

Steps to Improve BI Dashboard Performance

Optimized SQL Queries

Writing efficient queries is the foundation of fast dashboards. Avoiding unnecessary joins, using indexed columns, and limiting data retrieval to only what is needed can drastically reduce query execution time.
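As a rough illustration of the indexing point, the sketch below uses Python's built-in sqlite3 module as a stand-in for a real warehouse. The orders table, its columns, and the index name are hypothetical, and whether indexes apply at all depends on the engine behind your dashboard.

```python
import sqlite3

# Hypothetical in-memory table standing in for a real warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (orderkey INTEGER, country TEXT, totalprice REAL, comment TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [(i, "FR" if i % 2 else "DE", 10.0 * i, "n/a") for i in range(1, 1001)],
)


def query_plan(sql):
    """Return SQLite's plan description for a query as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


# Select only the columns the chart needs and filter on a single column.
sql = "SELECT orderkey, totalprice FROM orders WHERE country = 'FR'"

plan_before = query_plan(sql)  # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_orders_country ON orders (country)")
plan_after = query_plan(sql)  # the filter can now use the index

print(plan_before)
print(plan_after)
```

Comparing the two printed plans shows the same query switching from a table scan to an index search once the filtered column is indexed.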

Pre-Aggregated Data & Materialized Views

Instead of computing metrics on the fly, pre-aggregating data into summary tables or materialized views allows dashboards to fetch results instantly rather than processing raw data every time.
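A minimal sketch of the idea, again using sqlite3 as a stand-in: the summary table is built once, and the dashboard reads from it instead of the raw rows. The table name daily_sales_summary and the sample data are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (orderdate TEXT, mktsegment TEXT, totalprice REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("2024-01-01", "BUILDING", 100.0),
        ("2024-01-01", "BUILDING", 50.0),
        ("2024-01-01", "MACHINERY", 70.0),
        ("2024-01-02", "BUILDING", 30.0),
    ],
)

# Build the summary once (for example from a scheduled job), not on every dashboard load.
conn.execute(
    """
    CREATE TABLE daily_sales_summary AS
    SELECT orderdate,
           mktsegment,
           SUM(totalprice) AS total_sales,
           COUNT(*) AS order_count
    FROM orders
    GROUP BY orderdate, mktsegment
    """
)

# The dashboard query now reads a handful of pre-computed rows.
summary = conn.execute(
    "SELECT orderdate, mktsegment, total_sales, order_count "
    "FROM daily_sales_summary ORDER BY orderdate, mktsegment"
).fetchall()
print(summary)
```

The trade-off is freshness: the summary only reflects the raw data as of its last rebuild.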

Caching Mechanisms

Implementing caching strategies at the database or BI tool level reduces redundant query execution. Frequently accessed queries can be stored and quickly retrieved instead of recalculated each time.
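To make the mechanism concrete, here is a small sketch of a time-limited query cache. QueryCache and fake_run_query are hypothetical names invented for this example; it is not how any particular BI tool implements caching.

```python
import time


class QueryCache:
    """Tiny in-memory cache keyed by SQL text, with a time-to-live in seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # sql -> (expires_at, result)

    def get_or_run(self, sql, run_query):
        now = self.clock()
        entry = self._entries.get(sql)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the database is not touched
        result = run_query(sql)  # cache miss or expired entry
        self._entries[sql] = (now + self.ttl_seconds, result)
        return result


calls = []


def fake_run_query(sql):
    """Stand-in for a real database call; records each execution."""
    calls.append(sql)
    return [("total_sales", 42)]


cache = QueryCache(ttl_seconds=60)
first = cache.get_or_run("SELECT SUM(totalprice) FROM orders", fake_run_query)
second = cache.get_or_run("SELECT SUM(totalprice) FROM orders", fake_run_query)
print(len(calls))
```

The second call is served from memory, so the stand-in database function runs only once. A short time-to-live keeps results reasonably fresh while still absorbing repeated loads of the same chart.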

Columnar Databases

Traditional row-based databases can slow down analytical queries. Using a columnar database, such as Amazon Redshift or Google BigQuery, improves performance when dealing with large-scale analytical workloads.
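The difference between the two layouts can be sketched with plain Python structures. This is only a toy model of the storage idea, with made-up data, not a description of how any specific database stores its files.

```python
# Row-oriented layout: each record is stored together.
rows = [
    {"orderkey": 1, "country": "FR", "totalprice": 100.0, "comment": "n/a"},
    {"orderkey": 2, "country": "DE", "totalprice": 250.0, "comment": "n/a"},
    {"orderkey": 3, "country": "FR", "totalprice": 75.0, "comment": "n/a"},
]

# Column-oriented layout: the values of each column are stored together.
columns = {name: [row[name] for row in rows] for name in rows[0]}

# Row store: SUM(totalprice) has to walk through every full record.
row_total = sum(row["totalprice"] for row in rows)

# Column store: the same aggregate reads only the one column it needs.
col_total = sum(columns["totalprice"])

print(row_total, col_total)
```

Both totals are identical; the point is that the columnar version never has to read orderkey, country, or comment to compute the aggregate.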

Asynchronous Loading & Lazy Loading

Instead of making users wait for the entire dashboard to load at once, prioritize critical charts first while loading secondary elements in the background.
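One way to picture this is with asyncio: secondary charts start loading in the background while the critical chart is awaited first. The chart names and delays below are invented for illustration, and asyncio.sleep stands in for a real query.

```python
import asyncio


async def load_chart(name, seconds, loaded):
    """Pretend to run a chart query that takes `seconds` to return."""
    await asyncio.sleep(seconds)
    loaded.append(name)
    return name


async def load_dashboard():
    loaded = []
    # Start secondary charts in the background without waiting for them yet.
    background = [
        asyncio.create_task(load_chart("regional_breakdown", 0.05, loaded)),
        asyncio.create_task(load_chart("segment_table", 0.08, loaded)),
    ]
    # Await the critical chart first so it can be shown as soon as it is ready.
    await load_chart("total_sales_kpi", 0.01, loaded)
    first_rendered = list(loaded)
    # Then wait for the remaining charts to finish.
    await asyncio.gather(*background)
    return first_rendered, loaded


first_rendered, all_loaded = asyncio.run(load_dashboard())
print(first_rendered)
print(all_loaded)
```

The user sees the key metric after the shortest delay, while the slower charts fill in afterwards.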

Optimized Data Models

Structuring data efficiently using star or snowflake schemas allows for faster querying by minimizing redundant computations and unnecessary joins.

ETL Pipeline Improvements

Ensuring Extract, Transform, Load (ETL) processes are well-optimized can reduce data processing delays. Proper indexing, partitioning, and using efficient data formats (e.g., Parquet or ORC) can enhance retrieval speeds.
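Partitioning can be sketched without any particular file format: the ETL step groups rows by a partition key so that a later query only reads the partition it needs. The data and the sales_for_day helper are hypothetical, and a real pipeline would write each partition to storage (for example as Parquet files) rather than keep it in memory.

```python
from collections import defaultdict

orders = [
    {"orderdate": "2024-01-01", "totalprice": 100.0},
    {"orderdate": "2024-01-01", "totalprice": 50.0},
    {"orderdate": "2024-01-02", "totalprice": 30.0},
    {"orderdate": "2024-01-03", "totalprice": 20.0},
]

# ETL step: place each row into the partition for its order date.
partitions = defaultdict(list)
for row in orders:
    partitions[row["orderdate"]].append(row)


def sales_for_day(day):
    """Read only the matching partition; return (total, rows_scanned)."""
    scanned = partitions.get(day, [])
    return sum(r["totalprice"] for r in scanned), len(scanned)


total, rows_scanned = sales_for_day("2024-01-01")
print(total, rows_scanned)
```

Only two of the four rows are scanned to answer the query for a single day, which is the effect partition pruning aims for at much larger scale.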

The lifecycle of a dashboard

Exploring Data Visualization: From Raw Data to Actionable Insights

Data visualization often starts with an exploratory phase, where analysts try to uncover meaningful insights from raw data. At this stage, they may not yet know the exact data they need to present. A common approach involves working with datasets such as an orders table, which contains records of all products sold by a business.

Step 1: Establishing a Baseline Metric

A logical starting point for analysis is to look at overall sales volume. Running an initial SQL query to compute total revenue and the number of sales can provide a high-level overview of business performance. While this offers a broad perspective, it lacks context: it does not indicate whether sales are increasing or decreasing over time.
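A baseline query of this kind might look like the sketch below. It runs against a tiny in-memory SQLite table, and the column names (orderdate, totalprice) are assumptions chosen to resemble an orders table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (orderkey INTEGER, orderdate TEXT, totalprice REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "2024-01-15", 100.0), (2, "2024-01-20", 50.0), (3, "2024-02-03", 30.0)],
)

# One row summarizing the whole table: total revenue and number of orders.
total_revenue, order_count = conn.execute(
    "SELECT SUM(totalprice) AS total_revenue, COUNT(*) AS order_count FROM orders"
).fetchone()
print(total_revenue, order_count)
```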

Step 2: Identifying Trends with a Time Series

To gain deeper insights, analysts often create a time series that tracks sales trends daily, weekly, or monthly. By breaking sales data down into time periods, we can identify patterns, such as seasonal fluctuations or periods of high demand. This helps decision-makers understand whether sales are trending upwards or downwards.
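As a sketch, the same metrics can be grouped by month to form a time series. The example uses SQLite's strftime function to truncate dates; other engines have their own date functions, and the table and data here are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (orderkey INTEGER, orderdate TEXT, totalprice REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "2024-01-15", 100.0),
        (2, "2024-01-20", 50.0),
        (3, "2024-02-03", 30.0),
        (4, "2024-02-10", 45.0),
    ],
)

# Group the metrics by month to see how sales move over time.
monthly_sales = conn.execute(
    """
    SELECT strftime('%Y-%m', orderdate) AS order_month,
           SUM(totalprice) AS total_sales,
           COUNT(*) AS order_count
    FROM orders
    GROUP BY order_month
    ORDER BY order_month
    """
).fetchall()
print(monthly_sales)
```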

However, even with this improvement, the data may still lack actionable insights. If sales are declining, businesses need to understand the underlying reasons. Is the decline specific to a certain region? Is it related to a particular customer demographic? Are sales fluctuating based on the platform used for purchases (e.g., mobile vs. desktop)?

Step 3: Adding Dimensions for Deeper Analysis

To address these questions, analysts introduce dimensions into their queries. Dimensions are categorical variables that help break down metrics for deeper analysis. For example, refining the query to include factors like order_day, market_segment, and country provides a clearer picture of sales patterns across different customer groups and geographic regions.

By incorporating multiple dimensions, businesses can build dynamic dashboards that offer both a macro and micro-level view of sales performance. In real-world scenarios, analysts often add even more dimensions to ensure that stakeholders have the flexibility to explore data from multiple angles.
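A query with the three dimensions mentioned above could be sketched as follows. The flat orders table with order_day, market_segment, and country columns is an assumption made to keep the example short; in practice these columns often come from joined dimension tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_day TEXT, market_segment TEXT, country TEXT, totalprice REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        ("2024-01-15", "BUILDING", "FRANCE", 100.0),
        ("2024-01-15", "BUILDING", "FRANCE", 50.0),
        ("2024-01-15", "MACHINERY", "GERMANY", 30.0),
        ("2024-01-16", "BUILDING", "GERMANY", 45.0),
    ],
)

# Metrics (SUM, COUNT) broken down by three dimensions.
sales_by_dimension = conn.execute(
    """
    SELECT order_day, market_segment, country,
           SUM(totalprice) AS total_sales,
           COUNT(*) AS order_count
    FROM orders
    GROUP BY order_day, market_segment, country
    ORDER BY order_day, market_segment, country
    """
).fetchall()
print(sales_by_dimension)
```

Each additional dimension multiplies the number of possible groups, which is why the choice of dimensions matters for performance.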

Best Practices for Building an Effective Dashboard

Use Aggregations for Metrics: Key performance indicators (KPIs) such as total sales (SUM) and order count (COUNT) should be calculated using aggregate functions.

Choose Low-Cardinality Dimensions: Columns included in the GROUP BY clause should have a manageable number of unique values to optimize query performance.

Consider Future Use Cases: Analysts should anticipate future reporting needs by including relevant metrics and dimensions in the dataset. Some companies maintain denormalized tables with hundreds of columns to ensure flexibility in analysis.
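One simple way to check the cardinality guideline is to count the distinct values of each candidate column before using it in a GROUP BY. The sketch below does this on a hypothetical table; the cardinality helper and the column allow-list are illustrative names only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (orderkey INTEGER, market_segment TEXT, country TEXT)")
segments = ["BUILDING", "MACHINERY", "AUTOMOBILE"]
countries = ["FRANCE", "GERMANY"]
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(i, segments[i % 3], countries[i % 2]) for i in range(100)],
)

ALLOWED_COLUMNS = {"orderkey", "market_segment", "country"}


def cardinality(column):
    """Count distinct values in a column (names are checked against an allow-list)."""
    if column not in ALLOWED_COLUMNS:
        raise ValueError(f"unknown column: {column}")
    return conn.execute(f"SELECT COUNT(DISTINCT {column}) FROM orders").fetchone()[0]


cardinalities = {col: cardinality(col) for col in sorted(ALLOWED_COLUMNS)}
print(cardinalities)
```

Here orderkey is unique per row and would make a poor grouping column, while market_segment and country have only a few values each.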

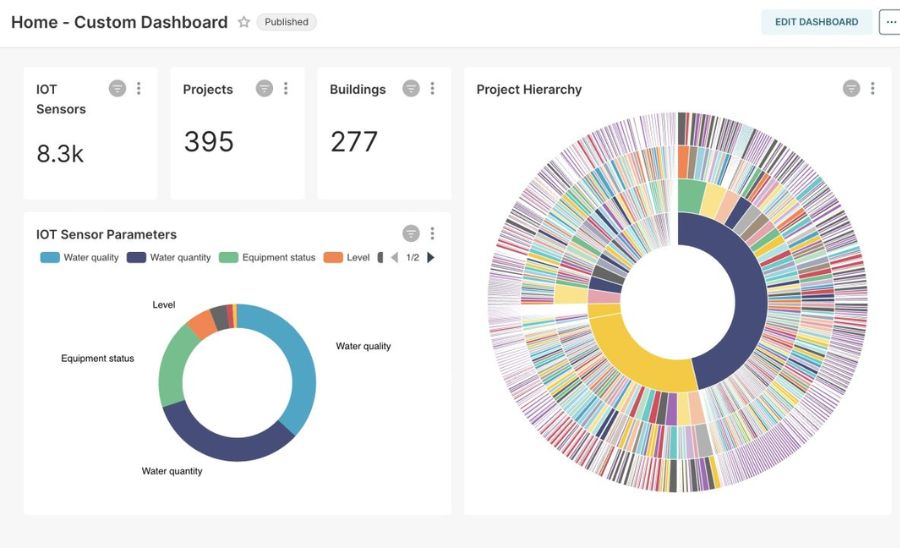

Preparing Data for Apache Superset

Once the data is structured with the right mix of metrics and dimensions, it can be loaded into a BI tool like Apache Superset. This allows users to slice and dice the data interactively, filtering by specific criteria to discover actionable insights. A well-designed dashboard enables organizations to make data-driven decisions quickly and effectively.

By following these principles, teams can transform raw data into a powerful resource for strategic planning and performance optimization.

Building the dashboard

Setting Up a Data Warehouse for Dashboard Optimization

Before building a dashboard, the first step is ensuring we have the necessary data in place. In this guide, we will use Trino, a powerful distributed SQL engine, as our virtual data warehouse. Trino allows us to query vast amounts of data efficiently, making it an excellent choice for handling analytical workloads.

To set up Trino quickly, we can use Docker Compose, which simplifies the deployment process. A minimal docker-compose.yml file can be used to spin up Trino in just a few steps. If you want to follow along with this setup, all the required files are available in the official repository: GitHub – Preset-io/Dashboard-Optimization.

Connecting to the Right Data Source

Once Trino is up and running, we need to connect it to a data source. In this case, we are using the TPC-H benchmark, a widely used standard for evaluating decision support systems. The TPC-H dataset includes multiple tables that simulate real-world business scenarios, making it ideal for testing dashboard performance.

TPC-H provides schemas of different sizes to suit various computational needs:

- sf1 (Scale Factor 1) – Represents approximately 1GB of data.

- sf100 (Scale Factor 100) – Contains about 100GB of data.

- sf1000 (Scale Factor 1000) – A much larger dataset, approximately 1TB in size.

The sf100 schema is selected for this tutorial because it strikes a balance between performance challenges and system requirements. It contains enough data to highlight optimization issues but remains manageable on a personal laptop. However, users with more powerful machines can experiment with larger schemas for more realistic simulations.
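To get a feel for what the scale factors mean in rows rather than gigabytes, the sketch below scales the base row counts that the TPC-H specification defines for scale factor 1 (150,000 customers and 1,500,000 orders). The estimated_rows helper is a hypothetical name used only for this example.

```python
# Base row counts at scale factor 1, as defined by the TPC-H specification.
BASE_ROWS = {"customer": 150_000, "orders": 1_500_000}


def estimated_rows(table, scale_factor):
    """Estimate the number of rows in a TPC-H table at a given scale factor."""
    return BASE_ROWS[table] * scale_factor


for sf in (1, 100, 1000):
    print(f"sf{sf}: orders={estimated_rows('orders', sf):,}")

orders_sf100 = estimated_rows("orders", 100)
customers_sf100 = estimated_rows("customer", 100)
```

At sf100 the orders table alone holds 150 million rows, which is large enough to make unoptimized dashboard queries noticeably slow.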

Why Use Trino and TPC-H for Dashboard Performance Testing?

- Trino’s Speed and Flexibility – Trino can query massive datasets quickly, making it ideal for real-time analytics.

- Scalability – The TPC-H dataset offers different scale factors, allowing users to test performance under varying data loads.

- Realistic Business Simulation – The dataset models sales, orders, and customer transactions, making it suitable for dashboard performance tuning.

- Synthetic Data Generation – Since the dataset is generated on the fly, there is no need to manually input or collect business data.

Customizing the Setup for Different Hardware

If running queries on sf100 is too slow on your machine, you can switch to a smaller schema (like sf1) for quicker execution. Conversely, if you want to simulate a real enterprise environment, you can scale up to sf1000 for a more intensive workload.

By setting up Trino and selecting an appropriate dataset, we create an optimized foundation for building high-performance dashboards. The next step is to refine SQL queries and implement best practices to ensure fast and efficient data visualization.

Creating a dataset

Creating a Virtual Dataset in Apache Superset

To generate meaningful visualizations from our data, we need to structure it in a way that facilitates quick analysis and efficient querying. In Apache Superset, we can achieve this by creating a virtual dataset, which helps in organizing metrics and dimensions without modifying the underlying database structure.

A virtual dataset in Superset serves as a bridge between fact tables (which store measurable business data) and dimension tables (which provide context like time, geography, or customer segments). Instead of hard-coding aggregations into the dataset's SQL, we leave them to Superset’s chart builder, which adds the aggregations each chart needs when it queries the dataset, keeping the dataset flexible.

Setting Up the Dataset

In this example, we will create a dataset named orders_by_market_nation. This dataset will link sales data from a fact table with relevant dimensions, such as market segment and nation. By doing so, we enable flexible analysis across multiple business aspects, such as:

- Sales trends over time

- Revenue breakdown by region

- Performance of different market segments

The SQL query behind this virtual dataset performs a JOIN operation between the main orders table and the necessary dimension tables. This setup allows us to filter, group, and analyze data efficiently without altering the database schema.
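The article does not show the query itself, so the following is only a guess at its general shape. It assumes TPC-H-style tables (orders, customer, nation) with simplified column names, and runs against tiny SQLite tables so the join can be checked end to end.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE nation (nationkey INTEGER, name TEXT);
    CREATE TABLE customer (custkey INTEGER, mktsegment TEXT, nationkey INTEGER);
    CREATE TABLE orders (orderkey INTEGER, custkey INTEGER, orderdate TEXT, totalprice REAL);
    INSERT INTO nation VALUES (1, 'FRANCE'), (2, 'GERMANY');
    INSERT INTO customer VALUES (10, 'BUILDING', 1), (11, 'MACHINERY', 2);
    INSERT INTO orders VALUES (100, 10, '2024-01-15', 100.0), (101, 11, '2024-01-16', 50.0);
    """
)

# Hypothetical SQL for the orders_by_market_nation virtual dataset:
# one row per order, enriched with dimensions, and no aggregation.
DATASET_SQL = """
SELECT o.orderdate  AS order_date,
       c.mktsegment AS market_segment,
       n.name       AS nation,
       o.totalprice AS totalprice
FROM orders AS o
JOIN customer AS c ON o.custkey = c.custkey
JOIN nation AS n ON c.nationkey = n.nationkey
"""

# ORDER BY is added here only to make the printed output deterministic.
dataset_rows = conn.execute(DATASET_SQL + " ORDER BY order_date").fetchall()
print(dataset_rows)
```

Because the dataset contains no GROUP BY, each chart remains free to choose its own metrics and dimensions.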

Why Use a Virtual Dataset in Superset?

- Performance Optimization – Since aggregations are defined in Superset’s chart builder, the dataset SQL stays lightweight, and each chart computes only the metrics and dimensions it needs.

- Flexibility – Users can modify, filter, and drill down into data dynamically without writing new queries.

- Seamless Data Exploration – Analysts can slice and dice metrics across various dimensions to uncover insights.

- Simplified Query Management – The same dataset can power multiple charts and dashboards without redundant SQL queries.

Key Considerations When Defining Metrics and Dimensions

- Metrics are aggregations (e.g., SUM(sales), COUNT(orders)) that help quantify business performance.

- Dimensions (e.g., order_date, market_segment, nation) allow grouping and filtering of data for better insights.

- Ensure Low Cardinality – High-cardinality dimensions (e.g., unique user IDs) can slow down queries, so choose attributes wisely.
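The way metrics and dimensions combine with a virtual dataset can be sketched as a small query builder: the dataset SQL becomes a subquery, dimensions become GROUP BY columns, and metrics become aggregate expressions. This is a simplified illustration under those assumptions, not Superset's actual query generation code; build_chart_query and joined_orders are hypothetical names.

```python
def build_chart_query(dataset_sql, metrics, dimensions):
    """Wrap a virtual dataset as a subquery and aggregate it (simplified sketch).

    metrics: dict mapping an output alias to an aggregate expression.
    dimensions: list of column names to group by.
    """
    select_parts = list(dimensions) + [
        f"{expression} AS {alias}" for alias, expression in metrics.items()
    ]
    sql = f"SELECT {', '.join(select_parts)} FROM ({dataset_sql}) AS virtual_table"
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql


chart_sql = build_chart_query(
    dataset_sql="SELECT * FROM joined_orders",
    metrics={"total_sales": "SUM(totalprice)", "order_count": "COUNT(*)"},
    dimensions=["market_segment", "nation"],
)
print(chart_sql)
```

Swapping the dimensions list is all it takes to produce a different breakdown from the same dataset, which is what makes one virtual dataset reusable across many charts.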

By leveraging Superset’s virtual datasets, we enhance the efficiency of our data pipeline while maintaining flexibility for future analysis. The next step is to integrate these datasets into interactive dashboards that load quickly and provide real-time business insights.

Facts from the Article

Dashboard Performance is Critical – Users expect dashboards to load within 3.14 seconds, a concept introduced by IBM in the 1960s as a “nanocentury.”

Slow Dashboards Reduce Productivity – If a BI tool is slow, users may abandon it in favor of alternative methods, reducing its effectiveness.

Large Datasets Create Performance Bottlenecks – BI dashboards often query millions or billions of records, leading to potential slowdowns.

Optimized SQL Queries Improve Performance – Efficient queries (e.g., avoiding unnecessary joins and indexing columns) can significantly speed up dashboards.

Pre-Aggregated Data and Materialized Views – Instead of computing metrics on demand, storing pre-calculated data ensures faster access.

Caching Reduces Query Execution Time – Storing frequently accessed queries prevents repetitive calculations, improving dashboard response times.

Columnar Databases Improve Performance – Databases like Amazon Redshift and Google BigQuery are optimized for analytical workloads.

Asynchronous & Lazy Loading – Loading essential charts first while fetching secondary data in the background enhances user experience.

ETL Optimization Matters – Using efficient data formats (e.g., Parquet, ORC) and proper indexing speeds up retrieval.

Trino & TPC-H are Ideal for Benchmarking – Trino serves as a high-performance SQL engine, and TPC-H provides scalable synthetic data for realistic simulations.

Virtual Datasets in Apache Superset – Superset enables data modeling without modifying the database structure, improving query flexibility.

Metrics and Dimensions Define Dashboards – Metrics use aggregations (SUM, COUNT), while dimensions (order_date, market_segment) allow deeper analysis.

Final Word

Optimizing Business Intelligence (BI) dashboards is essential for maintaining a seamless user experience. Slow dashboards reduce efficiency and limit decision-making capabilities. By implementing best practices such as query optimization, caching, columnar databases, and pre-aggregated views, organizations can achieve sub-four-second dashboard load times.

Apache Superset provides a powerful platform for creating virtual datasets, enabling users to slice and dice data without altering the database schema. Tools like Trino and TPC-H facilitate realistic performance testing, ensuring that dashboards remain scalable and responsive.

With strategic data modeling and performance tuning, companies can transform raw data into actionable insights, empowering decision-makers with fast, interactive, and high-performance BI dashboards.

FAQs

1. Why do BI dashboards become slow over time?

BI dashboards slow down due to increasing data volume, inefficient SQL queries, lack of caching, and poorly optimized data models.

2. How can I improve my BI dashboard performance?

Use optimized SQL queries, pre-aggregated data, caching mechanisms, columnar databases, and efficient ETL pipelines to improve performance.

3. Can Apache Superset generate synthetic data?

No, Apache Superset does not generate synthetic data. However, tools like Trino with TPC-H can provide synthetic datasets for testing dashboard performance.

4. What is a virtual dataset in Apache Superset?

A virtual dataset in Superset is a pre-defined query that joins fact and dimension tables, allowing for dynamic filtering, grouping, and slicing of data in a dashboard.

5. What is the benefit of using pre-aggregated data?

Pre-aggregated data reduces the time needed for query execution because aggregations are already computed, leading to faster dashboard load times.

6. What role does a columnar database play in BI performance?

Columnar databases (e.g., BigQuery, Redshift) optimize analytical queries by storing data column-wise rather than row-wise, reducing scan times and improving query performance.

7. What is the best way to structure BI data models?

Using star or snowflake schemas minimizes redundant computations and improves dashboard responsiveness.

8. Can I use Trino to handle large datasets for BI dashboards?

Yes, Trino is an excellent SQL engine for handling large-scale analytical queries efficiently, making it suitable for BI dashboards.

9. What is lazy loading in BI dashboards?

Lazy loading prioritizes critical charts first, while other visualizations load in the background, enhancing the user experience and dashboard interactivity.

10. How does caching help with dashboard performance?

Caching stores previously executed queries, eliminating redundant calculations and drastically reducing dashboard load times.
